Exploring the Spread of Artificial Intelligence, from Climate Models to Christmas Jingles

Two webcasts for you this week! As AI explodes, what does HI (human intelligence) think, and do?

UPDATED - I hosted two very different Sustain What discussions on the uses and implications, for better and worse, of rapidly-advancing digital tools that sift, learn, adapt and create - in other words, artificial intelligence.

Here you’ll meet Evan Greer, a transgender and online-rights campaigner who co-wrote some edgy Christmas tunes with the popular ChatGPT chatbot. The poet Andrei Codrescu stopped in to critique and recite (reluctantly) a chatbot-created “poem in the style of Andrei Codrescu.”

Below we explore how AI can improve climate models. Quite a range!

In recent years, the list of established and potential applications for artificial intelligence has absolutely exploded, from predictive medicine to mass surveillance, from filling mundane jobs to teaching, from finance to, gulp, blogging.

Recent weeks have seen a burst of amusement, astonishment and unease around OpenAI’s temporarily free release on November 30 of the ChatGPT chatbot, which the company called:

“the latest step in OpenAI’s iterative deployment of increasingly safe and useful AI systems. Many lessons from deployment of earlier models like GPT-3 and Codex have informed the safety mitigations in place for this release, including substantial reductions in harmful and untruthful outputs achieved by the use of reinforcement learning from human feedback (RLHF).”

More than a million people ran ChatGPT through its paces in just the first week.

At the same time, it’s clear the world is nowhere near ready to regulate or create standards for these technologies. For a concise summary, read this Forbes column drawing on the findings of a batch of scholars and experts who convened for a “Transatlantic Dialogue on Humanity and AI Regulation” in May in Paris: “Regulating Artificial Intelligence – Is Global Consensus Possible?”

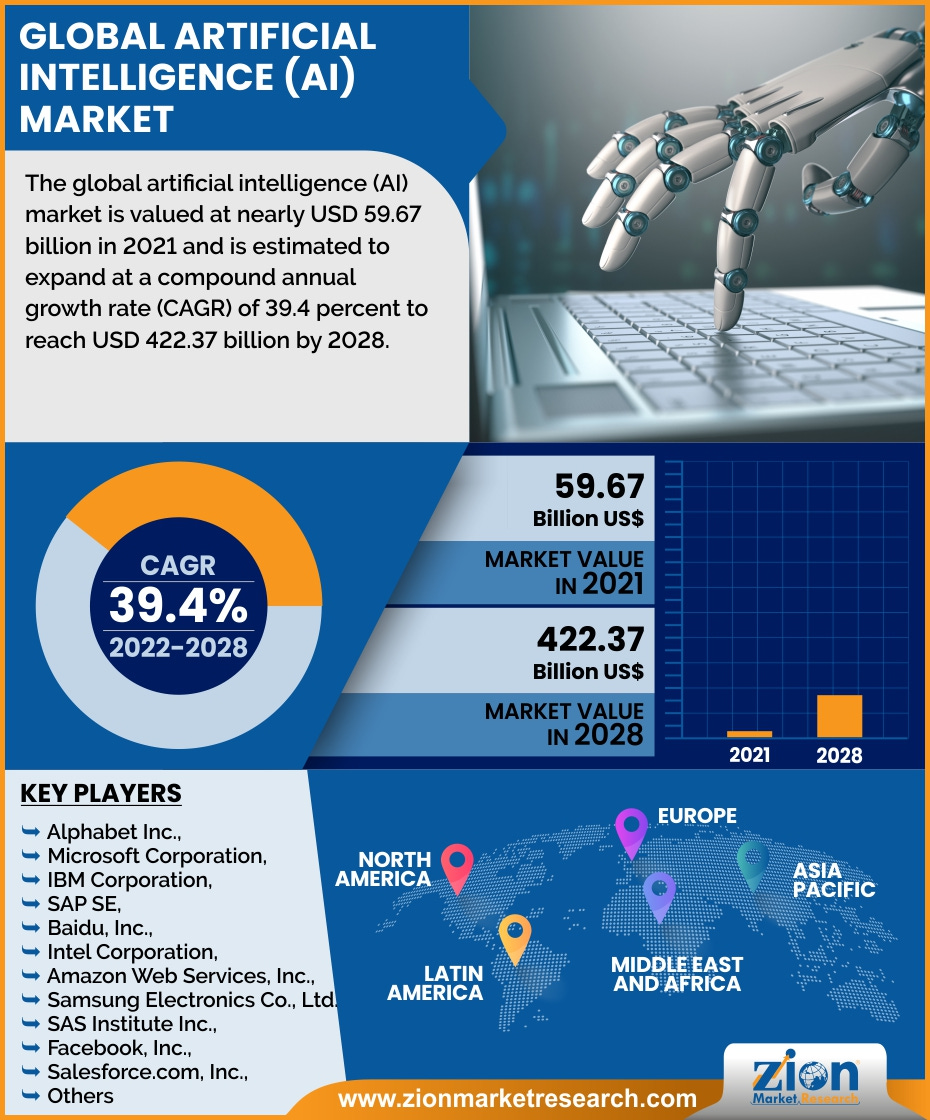

A $422-billion per year market value by 2028

AI is quickly penetrating almost every part of the global economy. Never rely on a single projection, but even if last May’s market report from Zion Market Research is way off, its conclusions are still stunning:

The global artificial intelligence (AI) market is valued at nearly USD 59.67 billion in 2021 and is estimated to expand at a compound annual growth rate (CAGR) of 39.4 percent to reach USD 422.37 billion by 2028.
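For readers who like to check the math on a projection like this, here is a minimal Python sketch of the compound-growth arithmetic. It uses only the three figures quoted above. The function names are my own, and because the quoted summary does not say which year compounding starts, the sketch tries both a six-year and a seven-year reading as an assumption.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual growth rate that turns start_value into end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Compound start_value forward at a fixed annual rate."""
    return start_value * (1 + rate) ** years


# Figures quoted from the report summary, in billions of US dollars.
START_2021 = 59.67
END_2028 = 422.37
QUOTED_RATE = 0.394

# Assumption: the summary does not state the compounding window, so try both readings.
for years in (6, 7):
    rate = implied_cagr(START_2021, END_2028, years)
    projected = project(START_2021, QUOTED_RATE, years)
    print(f"{years} years: implied CAGR {rate:.1%}, "
          f"value at the quoted 39.4% rate: {projected:.0f} billion")
```

Under these assumptions, the quoted rate lines up more closely with six years of compounding than with seven, which is one more reason not to lean on any single projection.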

A.I. lyrics and jingling bells

I hosted something of a “singalong” exploration of the turbulent online media environment and AI with Evan Greer, a self-described queer indie-punk singer-songwriter and digital-rights and LGBTQ activist and commentator. Greer is deputy director of the nonprofit Fight for the Future, which campaigns against oppressive abuses of technology and for an open Internet.

I couldn’t resist reaching out when I saw that Greer had just released a four-song EP, “Automated Christmas Joy,” with lyrics written by OpenAI’s ChatGPT tool in response to prompts like “Write a song called ‘Christmas at the Gay Bar’” and “Write a Christmas song where Santa fights the fascists.” (Proceeds from downloads will support the nonprofit group.)

Greer explained the project in a press release:

“There are some really funny moments where you can see the AI totally failing and succeeding at the same time, like when it spits out a line about ‘Santa’s mighty sack.’ It was also pretty dystopian and weird how when I asked it to write a song about Christmas at the gay bar, the AI clearly returned results informed by the recent wave of violence against LGBTQ+ people. I’m genuinely not sure what kind of impact this technology is going to have on our society. It scares me, but also I kinda can’t look away. In the end, this is why we need regulation that protects vulnerable people and prohibits harmful uses of AI. Fight for the Future does that work all year round, so I decided if I was going to release this monstrosity, I’d use it to raise funds for our work around AI regulation.”

We also talked about Twitter trouble (read Greer’s Time commentary on the Musk effect), federal oversight of online communication and social media alternatives. Greer wrote a very helpful Twitter thread on how to move to Mastodon. (And if you missed it, do watch my Sustain What session on making the most of that platform.)

A.I. for climate impact

On Thursday, December 22, at noon Eastern time, I hosted a Sustain What conversation on cutting-edge efforts at Columbia University and elsewhere to apply machine learning and other data science to computer modeling in ways that could give communities and companies clearer predictions of climate change dynamics in the next few decades.

Computer climate simulations have greatly increased basic understanding of human-driven global warming, as I’ve written for three decades. But enduring gaps in climate science and models have hindered the ability to use these tools to forecast and adapt to shifting hazards in the next few decades.

LEAP, the Center for Learning the Earth with Artificial Intelligence and Physics, is a five-year quest for breakthroughs. Headquartered at Columbia University and funded by the National Science Foundation, the center is fostering convergence in climate science, A.I. and data science to improve model results and building two-way connections with stakeholders and communities at risk to put that knowledge to use.

In this Sustain What webcast, I explored issues and opportunities with scientists from this team and a climate scientist from the Allen Institute for Artificial Intelligence.

Click here for the details: “Can Climate Models Aid Adaptation Efforts with Help from A.I.?” You can also watch and share the webcast on Facebook or LinkedIn.

My guests were:

Pierre Gentine, LEAP director, is professor of earth and environmental engineering as well as earth and environmental sciences at Columbia University. He studies the terrestrial water and carbon cycles and their changes with climate change.

Galen McKinley, LEAP deputy director, is a Columbia University professor of earth and environmental sciences and environmental engineering focused on the interplay of ocean, carbon cycle and climate science.

Kara Lamb, LEAP scientist, is an associate research scientist in Columbia’s earth and environmental engineering department. With Department of Energy funding, she is using machine learning and other data science to clarify the microphysics of cirrus clouds. Read this recent State of the Planet feature on her work probing ice crystal formation.

Oliver Watt-Meyer is a senior research scientist in the climate modeling group at the Allen Institute for AI.

Chad Small, a graduate student in atmospheric sciences at the University of Washington, is also a science journalist. Small just wrote an article about climate model questions and solutions for the Bulletin of the Atomic Scientists.

Your turn

I’d love to know if you’ve tried out the ChatGPT bot. If so, please post a comment below with your experience and reactions.

Parting bot

A decade ago, I became fascinated and unnerved by an experiment run by doctoral students Igor Labutov and Jason Yosinski and their professor, Hod Lipson, at the Cornell Creative Machines Lab.

They essentially got two audio chatbots talking to each other. I periodically have an end-times dream in which some extraterrestrial intelligence makes it to Earth someday and all that’s left is these two chatbots, solar powered, talking gibberish to each other.

Have a nice evening!

Postscript

Evan Greer’s experiment prompted me to commission a ChatGPT song, as well. My prompt: "Write a country song about Vladimir Putin's dreams for the world." The instant result is in this Twitter thread.