The Pandemic Was Predicted. So What?

Entering the fourth year of a continuing global health emergency, what lessons remain unlearned about acting on scientific warnings?

Conversations with intelligence veterans in the first weeks of the pandemic remain invaluable for weighing how to go beyond identifying threats to acting on them in the face of a "polycrisis."

I’m recirculating two Sustain What conversations from the early weeks of the COVID-19 pandemic as we enter the fourth year since the World Health Organization declared a global health emergency. Here’s a key moment:

The discussions, with longtime contacts who are global-risk and intelligence-agency veterans, are enduringly relevant given how real-time political and societal issues continue to distract from, or distort, analysis of looming threats in a multi-dimensional risk landscape.

I was spurred to post after catching up today with journalist Vince Chadwick’s Davos street interview with Adam Tooze on the concept of “polycrisis.”

What puzzled Tooze, a Columbia history professor with a wide-view lens on global risk and responses, was that the pandemic “has slipped off the agenda precisely at a moment when more people than ever before are becoming infected more rapidly than ever before in China.”

The purpose of the polycrisis concept, he says, “is to capture the way in which we get blindsided - we don’t see the thing that’s going to hit us. And here again, hiding in plain sight, is this major risk factor and it’s just not getting the attention either in the global discussion or the outlook for the world economy for 2023, let alone an actual investment in resource into the broad-band immunizations that were the talk of the town back in 2021…. Whereas we know, to this day, over 3 billion people worldwide didn’t even just get one shot in their arm.”

I saw Chadwick’s video clip via a tweet from the indispensable Philip Schellekens, a World Bank development economist whose visualizations of the deadly and enduring global vaccination gap inspired a Sustain What post a year ago.

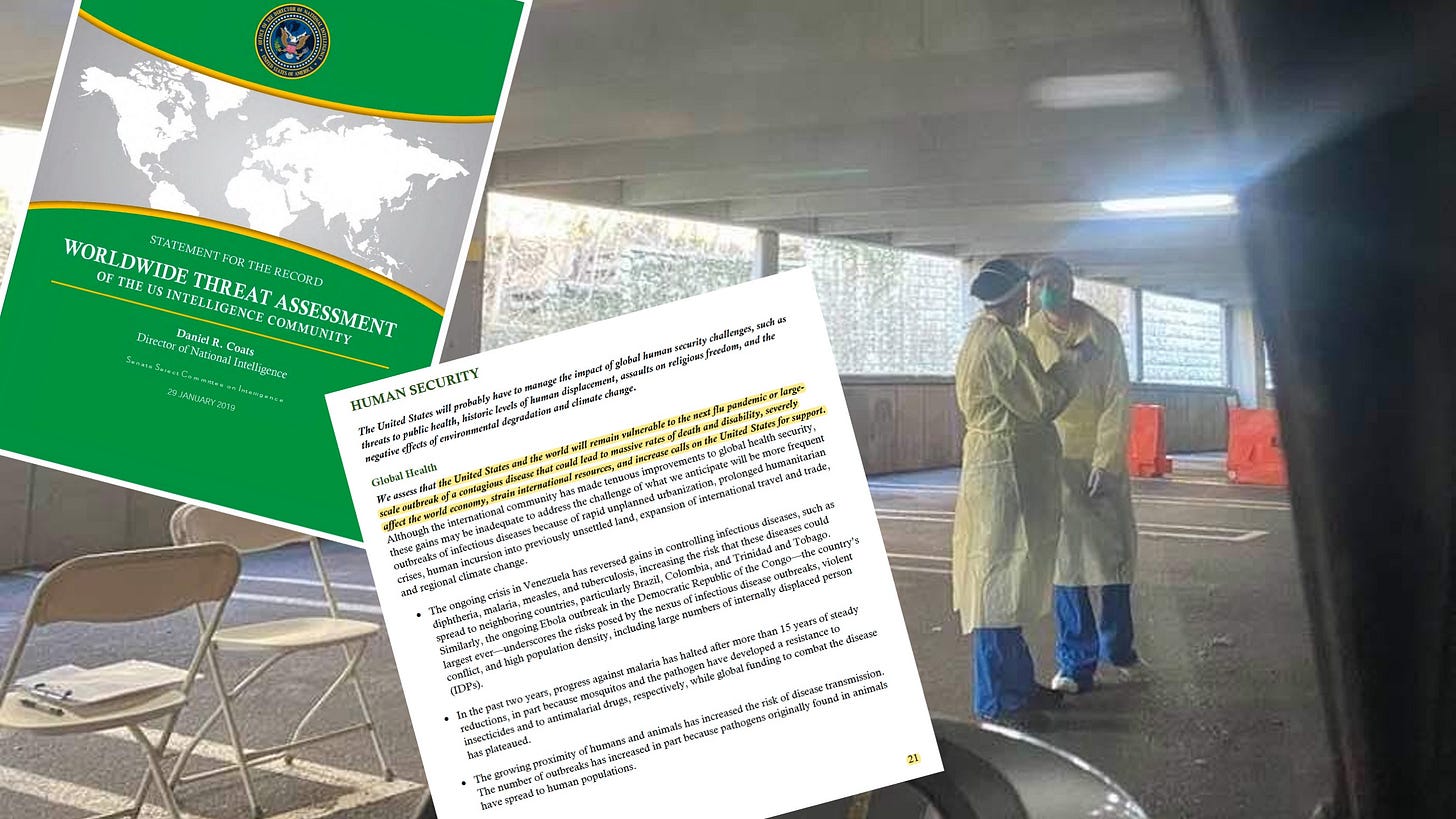

Overcoming the “page 21” effect

On March 15, 2020, my first pandemic webcast with former senior intelligence and national security officers was spurred by headlines noting that intelligence reports provided to the Trump White House had laid out the likelihood of a pandemic with unnerving clarity, and that one had even noted worrisome signs of a rapidly spreading virus in Wuhan in November 2019.

We weren’t in blame-casting mode. We were aiming to figure out what could be improved in how intelligence analysis is undertaken, and even in how the concept of national security is framed.

My guests were Geoff Dabelko, an Ohio University professor focused on global risk and foreign policy; Alice C. Hill, a former Obama-administration National Security Council official focused on climate, health and resilience who is now at the Council on Foreign Relations; and Rod Schoonover, an intelligence analyst focused on environment-related risks who famously quit in July 2019 after the Trump White House tried to limit his written congressional testimony on climate change.

The challenge for that White House or any other is not awareness as much as prioritization, he told us:

“In my world in the intelligence community, I was often very proud to be in one of the only parts of the government that either had the platform or the freedom to clearly state some of the risks. In the 2019 Worldwide Threat Assessment, it lays out language that’s very, very, eerily prescient of this moment. But it also landed on, I think, page 21. So, yes, it’s a risk, but we clearly don’t have it calibrated quite right.”

Indeed, the pandemic warning was on page 21.

That statement has stuck with me ever since as emblematic of a deep challenge for all of us: not just listing threats but finding ways to prioritize and act on them that limit regrets without wasting effort.

Have you come up with, or seen, approaches to this quandary? Please weigh in!

Here’s that full discussion. You can also watch and share on Facebook.

I dug in deeper with Schoonover and Hill a couple of weeks later.

That conversation is also available as an audio podcast here on Substack:

Postscript: Where I mention Rob Socolow’s description of big, but uncertain, climate risks as “monsters behind the door,” I meant his Princeton colleague Steve Pacala.

Ok Andy, you got me, had to go paid to weigh in on this one.... :-)

When it comes to prioritizing threats, there is a larger issue here which should be addressed, because it affects pretty much all issues. We should be wary of counting on the expert class to prioritize threats because they have business agendas which compete with a purely objective analysis.

The expert class are overwhelmingly very intelligent, well educated people with good intentions. There's no problem with their qualifications or morality.

The objectivity obstacle is that they make their living being experts. The problem is money. The experts have spouses, mortgages, children in college, parents to care for etc. And like any of us, these family responsibilities will be prioritized over "the world", as they should be. Thus, business agendas will triumph over intellectual agendas whenever there is a conflict between the two.

Experts are business people selling a product: information and analysis. And you can't sell a product that buyers feel they already have. And so for the expert the business model is to try to sell analysis that their buyers experience as being new, something they don't already have. This business requirement to provide new analysis is a form of bias, a distorting factor. And the impact of this distorting factor is amplified by their expert status. And so we see phenomena like this...

As bad as the pandemic has been and continues to be, it's NEVER going to collapse modern civilization in an hour. And this particular pandemic at least shows no sign of ever being able to collapse the modern world. And thus, as an act of objective reason, our concern about the pandemic should be prioritized way below that of nuclear weapons, which can destroy everything almost instantly.

The problem for experts is that they can't make a living saying that nuclear weapons are a dire threat, because their customers already know that, or at least think they do.

And so for example, we see many experts running as fast as they can towards topics like AI, because that's new territory, new information to be sold, a new product line, a new business opportunity. The problem is that these business agendas don't necessarily align with the threat environment. So for example, while AI is a great business opportunity for experts, it doesn't begin to compare to nuclear weapons as a threat, at least at the present time.

A perhaps even more dangerous form of bias experienced by experts is their relationship with the group consensus. Any intellectual elite who is dependent upon a paycheck can only explore so far beyond the boundaries of conventional group consensus thinking. If an expert goes farther than those boundaries, the group they depend on will stop thinking of them as experts and rebrand them as crackpots. And to an expert, reputation is everything, so such a rebranding presents an existential threat to their careers and must be avoided at all costs.

If conventional group consensus thinking can meet a challenge, then there is no problem. But if conventional group consensus thinking could defeat a problem, that problem would likely already be solved. And so, the most promising territory for solutions often lies in that set of ideas generally considered to be unrealistic, unreasonable, unworkable etc. Experts typically have a very limited ability to explore this territory.

Finally, what many experts are really expert at is the art of creating the image of being an expert. Think of this as branding expertise. It's a useful skill for sure, but it has pretty much nothing to do with threat analysis, prioritization of threats, or intellectual inquiry. Branding is a business skill, not an intellectual skill.

Here's more evidence. For 75 years the nuclear weapons community has been attempting to meet the nuclear challenge with information, analysis and consciousness raising. And after 75 years there is no credible evidence these approaches will ever work, as much as we wish they would.

But the nuclear weapons experts are locked into the failed status quo. They've built careers providing information, analysis and consciousness raising, and so they can't really admit that how they make their living has proven to be a waste of time.

One alternative would be for the scientific community to stage a series of strikes, to replace information, analysis and consciousness raising with leverage. But we're unlikely to see such a new approach, because going out on strike is not good for one's career.

The point here is NOT that experts are bad people, for there is no evidence of that. The point is that experts are not really in a position to do the kind of bold creative thinking that the challenges presented by the modern world require.