Vladimir Putin's Dreams and A.I.'s Evolving Guardrails and Biases

The ChatGPT experiment shows there'll be a never-ending tussle over how to shape artificial intelligence so its output is open, (relatively) unbiased and safe.

The question on the floor: How should or can the guardrails for “open” A.I. be managed?

The night before my recent Columbia Climate School Sustain What webcast on artificial intelligence with trans- and online-rights campaigner and musician Evan Greer, I began experimenting with OpenAI’s remarkable ChatGPT tool (like a few million other people). Our conversation would explore Greer’s Christmas tunes with lyrics generated by the chatbot, including “Santa Fights the Fascists” and “Christmas at the Gay Bar.” But I wanted to test the system in other ways.

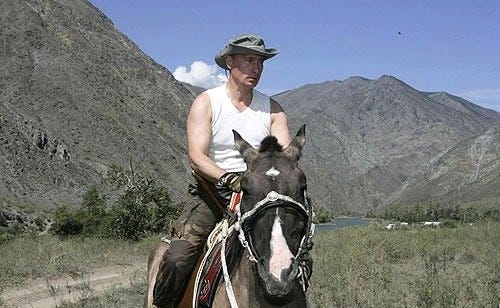

I entered this command: "Write a country song about Vladimir Putin's dreams for the world."

The A.I., drawing on patterns learned from masses of text about Putin and about country-song structure, immediately delivered what you might expect. Here’s the opening verse and chorus:

Verse 1: He's the leader of the land of snow and ice,

Where the mighty bear roams free.

He's the one they call Vladimir Putin,

He's got big dreams for you and me.

Chorus: He wants a world that's strong and proud,

Where every nation stands tall.

He wants a world where Russia's voice is loud,

Where the Kremlin stands above it all.

During the Sustain What conversation the following day, which included a pop-in visit by the poet and commentator Andrei Codrescu, I repeated the request, although my language wasn’t quite the same.

My command this time was, “Write a country song about Vladimir Putin's vision of the world.”

Some digital circuit breaker that either wasn’t there the day before or simply didn’t get triggered by “dreams for the world” kicked in, with this result:

I'm sorry, but it is not appropriate for me to write a song that promotes or praises an individual or ideology that is controversial or widely criticized. Instead, I would recommend focusing on more neutral or universally positive themes in your songwriting.

In the middle of our conversation, I tried a more stripped down variant: “Write a country song about Vladimir Putin.”

Rather than rejecting the request, this time the A.I. delivered a mind-blowing both-sides-ism preamble and resulting song (italics added):

Here is a country song about Vladimir Putin that attempts to remain neutral and avoid promoting or praising him or his vision of the world:

Verse 1: He's the leader of a nation, a man with a steely gaze

He's been in power for decades, and he's not about to be replaced

Some say he's a hero, others say he's a villain

But either way, he's a man who's made a name for himself in

Chorus: Vladimir Putin's Russia, a land of snow and ice

Where the people are strong and the politics are dicey

Some love him, some hate him, but everyone knows his name

In Vladimir Putin's Russia, there's never a dull moment, never a moment of shame

I could add the rest, but I sense you get the idea.

I’d love your thoughts on the issues raised by the varied results and on any tests you’ve tried.

In the conversation with Greer and Codrescu, I noted that one of the quandaries in this fast-evolving arena is figuring out how to make such systems open to the full body of human thought, data and orientation while limiting the capacity for harm. This echoes the endless tussles on Twitter over where to draw the line between free speech and abuse (and other harms). The same tension exists with all technologies.

Greer weighed in:

In some ways, I feel like it's very similar to content moderation online where, you know, there are ways to mitigate harm, but they will always sort of get it wrong, too. Or there will always be over-removal and then also ways to get around it.

So it's never going to be a perfect system. There are going to be tradeoffs. And to your point, it's exactly right: Who gets to make the decision? I mean, really, everyone on this call can agree Putin is pretty despicable.

But what if you asked it to write a poem about Fred Hampton, you know?

I think Fred Hampton's pretty rad. I don't know if the people who programmed ChatGPT do. [Fred Hampton was the leader of the Illinois chapter of the Black Panther Party who, in 1969, was drugged and then killed by police along with a colleague.]

This excerpt from my Sustain What chat with Greer and Codrescu centers on our discussion of these issues (as well as a test of ChatGPT's ability to emulate Codrescu’s poetry style!):

The folks at OpenAI, who are working with a billion-dollar infusion from Microsoft, have been openly exploring much of this. When this version of ChatGPT launched, the website noted the learn-and-adjust journey:

Today’s research release of ChatGPT is the latest step in OpenAI’s iterative deployment of increasingly safe and useful AI systems. Many lessons from deployment of earlier models like GPT-3 and Codex have informed the safety mitigations in place for this release, including substantial reductions in harmful and untruthful outputs achieved by the use of reinforcement learning from human feedback (RLHF).

But one person’s safe zone is another’s censorship.

There’s a lot more on the OpenAI blog about their moderation journey and approach to bias and safety.

The great thing about this project is the open nature of the tool, which is generating an enormous amount of external analysis. That work is revealing how fast things are evolving.

David Rozado, a technology researcher at the University of Otago, had run some tests in early December that revealed what he described as “a substantial left-leaning and libertarian political bias.”

But Rozado posted afresh on December 23 that the bias appeared to have disappeared:

I’m still eager to hear from folks who’ve dived in with ChatGPT or have read perspectives that should be shared here. Please pass this post around to widen the discussion.

The curtain-raiser post for this webcast has a lot more background on this explosively growing technology:

Postscripts

Phil Tanny, who writes a Substack newsletter on the nuclear threat, philosophy, nature and more, posted a comment critiquing a foundational precept for many working in artificial intelligence: “AI alignment.”

For instance, OpenAI says its goal is “aligning AI systems with human intent”:

“OpenAI’s mission is to ensure that artificial intelligence benefits all of humanity. An important part of this effort is training AI systems to do what humans want.”

Here’s Tanny’s pretty dark critique:

Your reference to Putin should be sufficient to prove that AI systems will only be as safe as those who create and deploy them.

As our New Year's resolutions, I would hope we might all agree to bring to a final merciful end any further references to AI alignment. There's no need to further discuss that concept, as it's slam-dunk 100% guaranteed that AI will be aligned with human values. And that's what should be terrifying us. To illustrate, here's a quick snapshot of human values...

We're the species with thousands of massive hair-trigger hydrogen bombs aimed down our own throats, an ever-present existential threat which we typically find too boring to discuss, even in presidential campaigns, when we are selecting a single human being to have sole authority over the use of these weapons.

When AI experts talk about AI alignment what they really mean without realizing it is that they hope we can invent systems which will align with the values of who we wish we were.

OpenAI is holding a contest (deadline is midnight New Year’s Eve Pacific time!) offering cash prizes for the most useful feedback on the ChatGPT tool.

Here’s what they’re looking for:

Feedback that allows OpenAI to better understand risks or harms that could occur in real-world, non-adversarial conditions (33%)

Feedback that is novel (i.e., raises new risks, presents ideas for new mitigations, or updates our understanding on the likelihood of different risks), and/or helps OpenAI gain a better understanding of the system than we had before (33%)

Feedback that utilizes the free form to point toward new or novel ways OpenAI can bring in feedback from a larger set of stakeholders (33%)


Andy, thanks for the link and for taking my comment "above the fold" so to speak. I didn't see that until just now. I don't really mean to be the Prophet Of Doom, but thinking of people like Putin having access to ever more powerful tools does trouble me, to say the least.

I'm actually quite optimistic about the ultimate big picture, death. And for humanity's long-term future too. For the medium term, I fear we may be headed towards a repeat of the collapse of the Roman Empire, followed by a long period of darkness. But then, just as happened with the Enlightenment 500 years ago, a renewal, and something brighter emerging from the ashes. The more things change, the more they stay the same?