I'm With the Experts Warning that A.I.'s Potential for Huge Harms Needs Far More Attention

There's an Intergovernmental Panel on Climate Change, but nothing like it for other sources of planet-scale, society-wide risk - like AI. That should change.

I was among the first 100 people to sign onto the 22-word note of concern about artificial intelligence that was drafted by top researchers and developers in this explosively emergent component of Earth’s “technosphere” and released today.

I explain why below and hope to hear your views in the comments.

The wording of the statement, developed by the Center for AI Safety, is intentionally general and meant to provoke reactions:

“Mitigating the risk of extinction from A.I. should be a global priority alongside other societal-scale risks, such as pandemics and nuclear war.”

The idea for a single-sentence statement came from David Scott Krueger, a University of Cambridge researcher focused on aligning AI with human needs (you’ll see the term “alignment” a lot in this realm).

Signatories range from founders of OpenAI, Google DeepMind, Anthropic and other AI labs to Martin Rees, the astrophysicist and co-founder of the Centre for the Study of Existential Risk at the University of Cambridge. I'm planning a Sustain What conversation or two with some of those who support the statement and with others who don't.

The wrangling of signatories has been managed by Dan Hendrycks, the director of the Center for AI Safety and author of a host of relevant papers, including the pithily titled “Natural Selection Favors AIs over Humans.” I sense this line will get your attention:

Competitive pressures among corporations and militaries will give rise to AI agents that automate human roles, deceive others, and gain power. If such agents have intelligence that exceeds that of humans, this could lead to humanity losing control of its future.

Hendrycks’s Center has built a course in Machine Learning Safety with one video module on AI and Evolution. Here’s a statement from that module to ponder: “AI may be callous towards other organisms (including humans), just as other organisms in nature are manipulative, deceptive, or violent.”

Why did I sign?

I signed because one doesn’t have to dive into the still-speculative realm of untethered superintelligence to get to the reality that these technologies pose underappreciated disruptive dangers even now.

Sure, you could say I’m a worrywart. After all, I wrote in depth for The Times about the Y2K computer breakdown threat, which likely was an overly hyped juncture in technology history. But I’m convinced this time really is different.

One practical reason for deep concern emerges when you consider what’s possible outside the realm of academic and corporate computer scientists and related experts who’ve tried, like this group, to keep AI aligned with Steven Pinker’s “better angels of our nature.”

Read this excerpt from “China Is Flirting With AI Catastrophe,” a Foreign Affairs commentary by Bill Drexel and Hannah Kelley of the Center for a New American Security, to consider near-term perils posed where AI intersects with realms in which disregard for general human welfare is the norm:

When drug researchers used AI to develop 40,000 potential biochemical weapons in less than six hours last year, they demonstrated how relatively simple AI systems can be easily adjusted to devastating effect. Sophisticated AI-powered cyberattacks could likewise go haywire, indiscriminately derailing critical systems that societies depend on, not unlike the infamous NotPetya attack, which Russia launched against Ukraine in 2017 but eventually infected computers across the globe. Despite these warning signs, AI technology continues to advance at breakneck speed, causing the safety risks to multiply faster than solutions can be created.

There’ve been calls for establishment of an oversight organization like the International Atomic Energy Agency, chartered in the late 1950s to help ensure atomic power was used for peace. It’s vital to understand that the IAEA only operates within the context of a wide array of international treaties. This isn’t about establishing a bureaucracy nearly as much as it is about establishing a sustained dialogue rooted in the need for deep transparency and accountability.

When I think about treaties and scientific and technical advice, I do think about the best aspects of the Intergovernmental Panel on Climate Change. There isn’t a similar entity advising the world’s nations on the hazards, risks and solutions attending explosive changes in Earth’s information environment. Perhaps there should be.

AI and End Times

At the highest level of risk - where human intelligence hits a ceiling through which machine learning can easily pass - there’s plenty to consider.

I’ve long been, and remain, a technological optimist, but always with caveats. When I was invited to speak at the 2014 TEDx Portland event on the idea of perfection, I stated that humans are actually perfect for the task of navigating a complex century of intertwined risk and opportunity - but with a big asterisk. Watch here.

There’s a fantastic upside to the global connectivity afforded by the Internet, to the explosive expansion of remote sensing and other data sources revealing human and environmental dynamics, and to ever-growing computational capacity.

The asterisk relates to the human species’ layered brain, with our lizard brain and high-level cortex messily interlinked. Humanity’s beauty and dark side both emerge from that nexus.

Manolis Kellis at MIT laid this out beautifully in a recent conversation with Lex Fridman (Fridman also signed the statement). I know most newsletter readers don’t click links, but I do hope you play at least some of this remarkable discussion.

The human race - with ourselves

When I launched my New York Times blog in 2007, I proposed we’re in a race between potency and awareness - of both the larger environmental impacts of our actions and of human perceptual weaknesses. The whiteboard sketches I did with blog designer Jeremy Zilar before we launched convey this.

I sensed much merit in the cautionary comment made by Cardinal Óscar Andrés Rodríguez Maradiaga in 2014, on the first day of the Vatican meeting “Sustainable Humanity, Sustainable Nature: Our Responsibility,” at which I was a respondent. As you may recall from an earlier post, Maradiaga said this:

“Nowadays man finds himself to be a technical giant and an ethical child.”

And that was before CRISPR-Cas9 genome editing and GPT-4.

So what do you think?

Hype or horror?

Weigh in.

Learn more

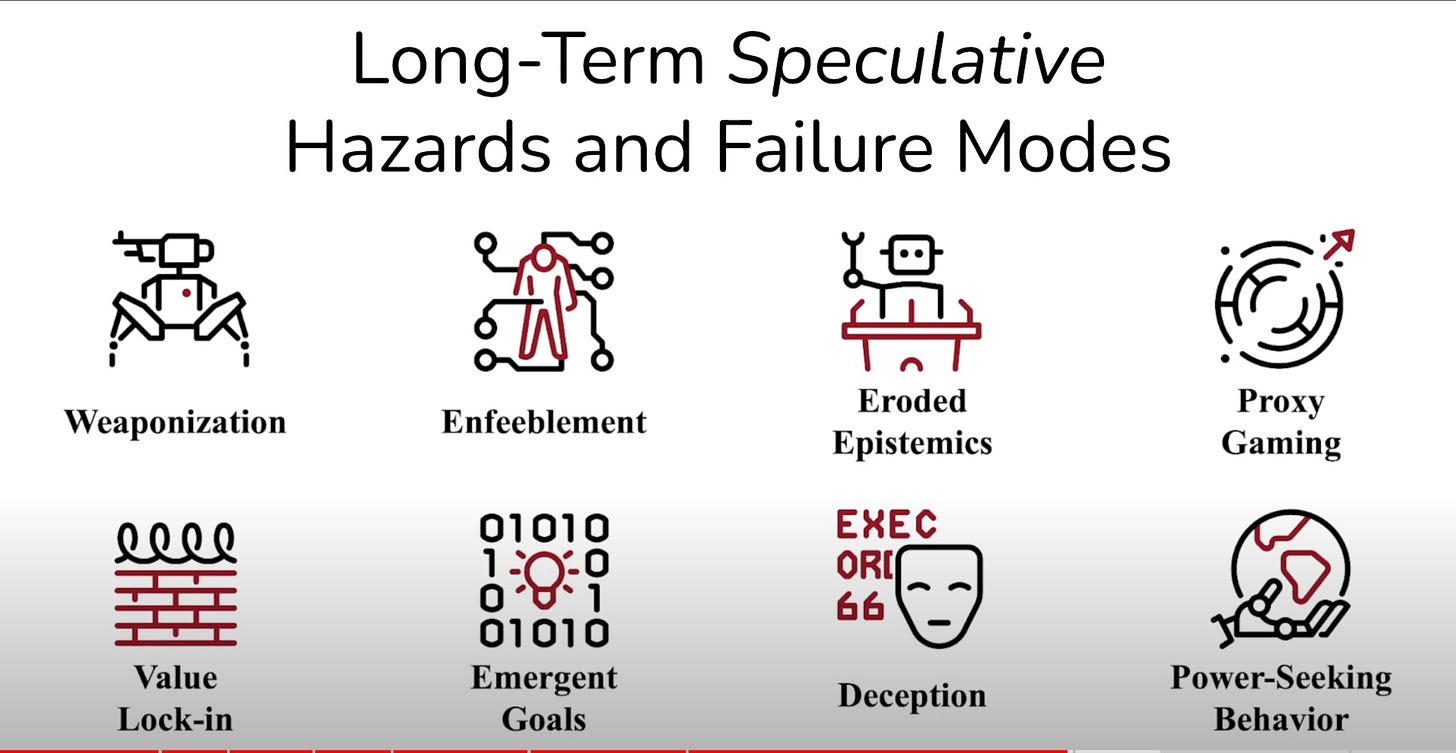

Here’s the introductory video in the online tutorial on Machine Learning Safety - including this slide on potential hazards and “failure modes”:

Also read “The Governance of Superintelligence,” by the co-founders of OpenAI, Sam Altman, Greg Brockman and Ilya Sutskever.


Had a power cut the other day that lasted a night and half a day until it was fixed, and lost power to all the power points in the living area. It was a good reminder of how dependent technology is on an uninterrupted power supply: I was unable to recharge my laptop or mobile phone for 30 hours, and the fridge and freezer defrosted. That power cut happened because one power point short-circuited, causing the whole connected circuit to drop out. One level of control.

To connect my laptop to the internet I need ... power supplied to my house, a modem that also needs that power, the modem's link to the broadband connection at the curb, fiber-optic cable underground, some kind of mix-and-match station where all the cables meet, and so on. I only mentioned five levels of control there, all places where the connection can be lost. Power supply is a fragile commodity.

How many levels of control are needed to keep a large AI installation going, I wonder? And I wonder whether they could be limited in their output by limiting their power supply. Give them a system isolated from the net and make them ration themselves.

Or we can wait for an ice storm to do the job for us.