Updated post-show: I hope you’ll listen to my conversation with tech lawyer, journalist and now novelist Stephen Harrison to explore his Wikipedia reporting, his novel and the tussle between Elon Musk and the nonprofit independent knowledge gateway. Watch on X/Twitter, Facebook, LinkedIn and YouTube.

Wikipedia is an inspiring digital example of cathedral building - the process of building a structure that each generation knows is the work of generations. This nonprofit platform has matured into an astonishing realtime summary of human knowledge since its founding back in January 2001. Sure, it’s imperfect, like the species that is building it. (Read the Wikipedia entries on Why Wikipedia is So Great and Why Wikipedia is Not So Great.) But it deserves widespread support.

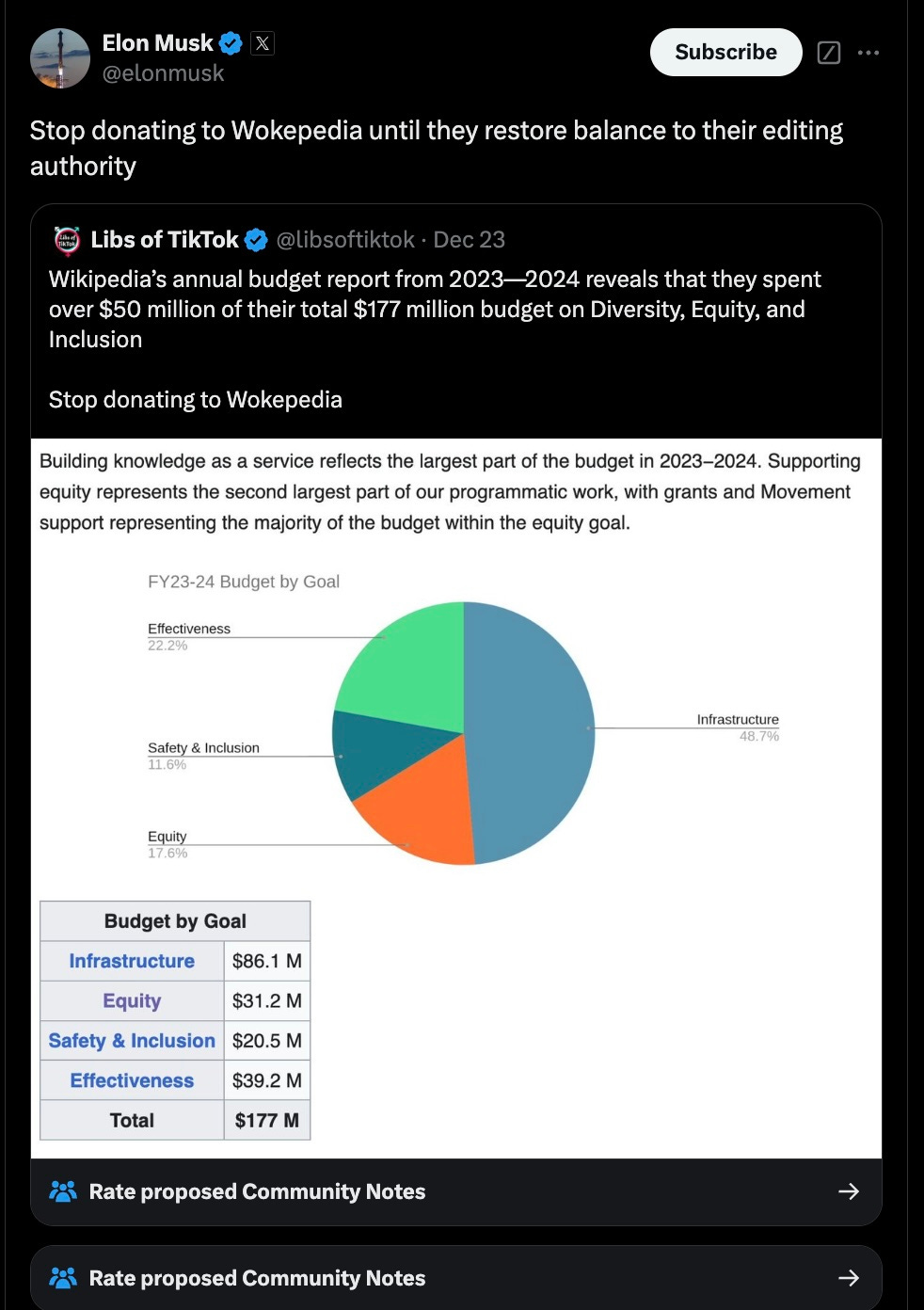

That’s why I was peeved when Elon Musk posted this just before Christmas:

In response I did this. And I hope you will, too. Here’s where to donate.

I was retweeting a post by Stephen B. Harrison, a writer and tech lawyer who’s been blogging about Wikipedia and related issues for years (mainly through Source Notes at Slate) and now has a novel out, called The Editors, which (as he tweeted) features a billionaire who hates the free Internet.

This October 2023 Source Notes post by Harrison on Wikipedia and X and Israel’s Gaza invasion helps explain some of Musk’s wider irritation with the realtime online encyclopedia:

In 2022, Harrison wrote about Musk’s frustrations with Wikipedia’s articles on Musk.

It’s hard to come away from that piece without a sense that the world’s richest man is upset that something online is outside of his control.

A diverse planet’s knowledge bank needs to reflect that diversity

Musk’s most recent “Wokepedia” dig relates to the equity and inclusion portions of the Wikimedia Foundation’s 2023-24 financial plan totalling $177 million: Infrastructure, $86.1 million; Equity, $31.2 million; Safety & Inclusion, $20.5 million; Effectiveness, $39.2 million.

Here’s a key line from the equity wedge:

We deeply believe that to succeed, we must focus on the knowledge and communities that structures of power and privilege have left out. We cannot serve as the essential infrastructure of the ecosystem of free knowledge for the world without the people of the world working together to assemble and distribute information resources that have value for all. To achieve our vision, we must also raise awareness and build trust with people who we need to help us close our equity gaps.

The Wikimedia Foundation’s 2024-25 annual plan includes a related priority that I wholeheartedly agree with:

Knowledge Equity: "advance our world by collecting knowledge that fully represents human diversity."

The neutrality challenge

There’s one more foundational issue to consider: Can Wikipedia sustain its fundamental principle that its output has a “neutral point of view”?

As the Wikimedia Foundation states, “all encyclopedic content on Wikipedia must be written from a neutral point of view (NPOV), which means representing fairly, proportionately, and, as far as possible, without editorial bias, all the significant views that have been published by reliable sources on a topic.”

Earlier this year the libertarian Manhattan Institute published “Is Wikipedia Politically Biased?”, an analysis of a sample of Wikipedia entries about a range of public figures and entities. It concluded, “We find a mild to moderate tendency in Wikipedia articles to associate public figures ideologically aligned right-of-center with more negative sentiment than public figures ideologically aligned left-of-center.”

The analysis was done by David Rozado, a New Zealand-based researcher who’s developed a penchant for teasing out and conveying biases of various kinds in online content.

He summarized his findings earlier this year on his Substack.

Rozado emphasizes that he’s a fan of Wikipedia, and his report concluded this way:

The goal of this report is to foster awareness and encourage a reevaluation of content standards and policies to safeguard the integrity of the information on Wikipedia being consumed by both human readers and AI systems. To address political bias, Wikipedia’s editors could benefit from advanced computational tools designed to highlight potentially biased content. Additionally, collaborative tools similar to X’s Community Notes could also be instrumental in identifying and correcting bias. While acknowledging Wikipedia’s immense contributions to open knowledge, we see its journey towards complete impartiality as ongoing.

I’m sure this is an area where artificial intelligence can indeed help identify hot spots amid the vastness of Wikipedia content that editors can then zoom in on to assess. I’ve invited Rozado to join the Monday chat.

I hope you’ll subscribe to Rozado’s Substack and also Stephen Harrison’s Substack.

And do click back to the Sustain What conversation I did with climate-focused Wikipedia volunteer editors from around the world along with others.

Why Wikipedia works, even for climate

As many of you know, I still use Twitter / X / Xwitter a lot - to learn, to connect people who might otherwise never find each other, to offer observations and course corrections amid the flood. It requires work to avoid the abuse and information pollution, but tell me what’s good in the world that